- Published on

Supervised Fine-tuning for Large Language Models

- Authors

- Name

- Rahul kadam

- @rahul_jalindar

Last Modified : Wednesday, May 01, 2024

Table of content

What is Supervised Fine-tuning

Supervised Fine-tuning which is also know as SFT, is a method to fine tune a large language model in supervised manner.

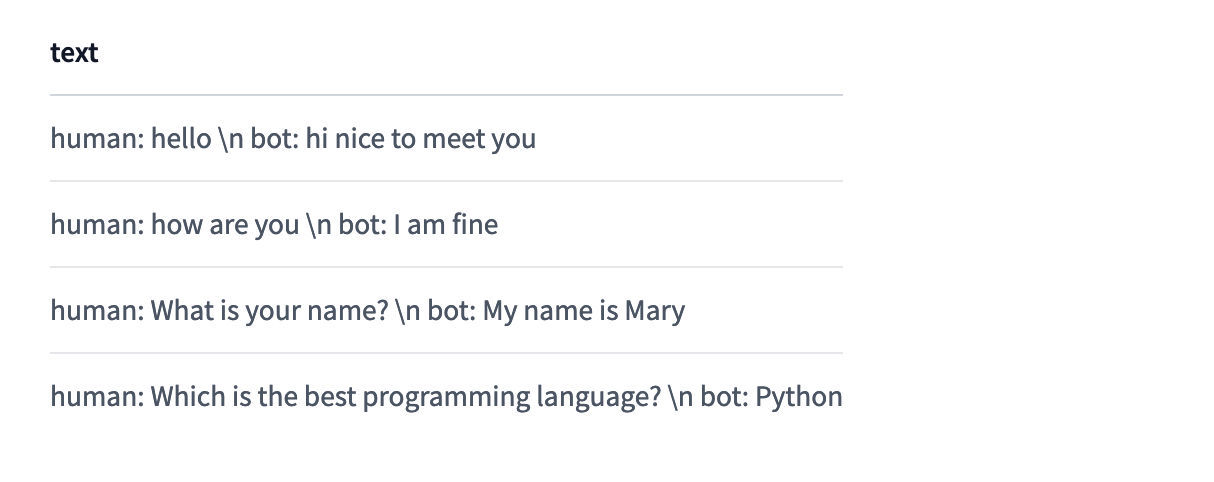

For eg, look at the following image:

Above image contains SFT datatset samples, this dataset tells language models what to respond when asked a question in that manner like what is the best programming language?, the response will be python not a Javascript or any other language, that is a supervised fine-tuning.

When to use it

Supervised fine-tuning is used when you want your language model to follow certain instruction. This yields in model being more correct in responses, coherence, and model performace also increases.

This was first used in InstructGPT, and then in it was also used in ChatGPT, as well as in LlaMA-2.

Create SFT dataset

As I explained it previously, SFT depends on dataset, so it's accuracy is also depends on quality of dataset.

We need to include human verification to check the quality of dataset.

How to implement it

Supervised Fine-tuning and pretraining languge models are similar, in pretraining we pass traning data for next token prediction and in SFT we pass SFT dataset the same way for next token prediction.

We can use SFTTrainer from Transformer Reinforcement Learning

from datasets import load_dataset

from trl import SFTTrainer

dataset = load_dataset("imdb", split="train")

trainer = SFTTrainer(

"facebook/opt-350m",

train_dataset=dataset,

dataset_text_field="text",

max_seq_length=512,

)

trainer.train()

With this few lines you can train your own SFT.